Azure Synapse

Guide to integrating Azure Synapse with Sprinkle

This page covers the details about integrating Azure Synapse with Sprinkle.

When you set up a Synapse connection, Sprinkle also requires an Azure Storage container. This guide covers the role of each component and the steps to set them up.

Integrating Synapse: All analytical data is stored in and queried from the Synapse warehouse.

Create Azure Storage Container: Sprinkle stores all intermediate data and report caches in this container.

Step-by-step Guide for integrating Synapse

STEP-1: Allow Synapse to accept connection from Sprinkle

Allow inbound connections on the Synapse JDBC port (default 1433) from the Sprinkle IPs 34.93.254.126 and 34.93.106.136.
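After adding the firewall rule, a plain TCP check can confirm that the Synapse endpoint accepts connections on the JDBC port. The Python function below is a minimal sketch, not a Sprinkle tool: it only shows that the port is reachable from the machine running it (which must itself be allowed through the firewall), and it does not authenticate to Synapse. The host name in the example comment is a hypothetical placeholder.

```python
import socket


def port_is_reachable(host: str, port: int = 1433, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    This proves only that the port is open from this machine; it performs no
    login and sends no SQL.
    """
    try:
        # create_connection resolves the host and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and DNS failures are all OSError subclasses.
        return False


# Example (hypothetical host): port_is_reachable("myworkspace.sql.azuresynapse.net")
```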

STEP-2: Configure Synapse Connection

Log in to the Sprinkle application

Navigate to Admin -> Warehouse -> New Warehouse Connection

Select Azure Synapse

Provide all the mandatory details

Distinct Name: Name to identify this connection

Host: Provide the IP address or host name.

Port: Provide the port number (the default Synapse JDBC port is 1433).

Database: Provide the database name, if any. It must be an existing database.

Username

Password

Server Type: Choose either Dedicated or Serverless.

External Data Source: Required only when the Server Type is Serverless.

Advanced Settings: Turn this on or off as required. If it is turned on, configure the following:

Maximum Error Count: If the load returns the specified number of errors (error_count) or more, the load fails. If it returns fewer errors, the load continues and returns an INFO message stating the number of rows that could not be loaded. Use this parameter to let loads continue when certain rows fail to load into the table because of formatting errors or other inconsistencies in the data. For reference, see this

Minimum Idle Connections: This property controls the minimum number of idle connections in the pool.

Maximum Pool Size: This property controls the maximum size that the pool is allowed to reach.

Test Connection

Create
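The connection form above can be summarized as a small data model. The Python sketch below is hypothetical: the class, field names and defaults (other than the 1433 port mentioned earlier) are assumptions for illustration, not Sprinkle's API. It encodes two rules stated in this guide (External Data Source is needed only for Serverless, and a load fails once its error count reaches Maximum Error Count) plus one assumed sanity check that Minimum Idle Connections does not exceed Maximum Pool Size.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SynapseConnectionSettings:
    """Hypothetical model of the Azure Synapse connection form (not Sprinkle's API)."""
    distinct_name: str
    host: str
    database: str
    username: str
    password: str
    server_type: str                          # "Dedicated" or "Serverless"
    port: int = 1433                          # default Synapse JDBC port
    external_data_source: Optional[str] = None
    # Advanced settings (assumed defaults, used only when Advanced Settings is on).
    max_error_count: int = 0
    min_idle_connections: int = 1
    max_pool_size: int = 10

    def validate(self) -> None:
        """Raise ValueError if the settings break a rule from the guide or an assumed check."""
        if self.server_type not in ("Dedicated", "Serverless"):
            raise ValueError("server_type must be 'Dedicated' or 'Serverless'")
        if self.server_type == "Serverless" and not self.external_data_source:
            raise ValueError("external_data_source is required for Serverless")
        if not 0 < self.port < 65536:
            raise ValueError("port must be between 1 and 65535")
        if self.max_error_count < 0:
            raise ValueError("max_error_count must not be negative")
        # Assumption: an idle floor above the pool ceiling makes no sense.
        if not 0 <= self.min_idle_connections <= self.max_pool_size:
            raise ValueError("need 0 <= min_idle_connections <= max_pool_size")


def load_outcome(errors: int, max_error_count: int) -> str:
    """Apply the Maximum Error Count rule as worded in this guide."""
    if errors == 0:
        # Assumption: a load without errors always succeeds.
        return "SUCCEEDED"
    if errors >= max_error_count:
        return "FAILED"
    return f"SUCCEEDED (INFO: {errors} row(s) could not be loaded)"
```

`load_outcome` follows the guide's wording literally; treating zero errors as success is an added assumption, and the exact boundary behavior in Sprinkle may differ.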

Create Azure Storage Container

Sprinkle requires an Azure Storage container to store intermediate data and report caches.

Note: If the warehouse is Azure Synapse, use the Data Lake Storage account that was configured for Synapse Analytics during workspace creation.

Follow the steps below to create and configure the storage container:

STEP-1: Create a Storage Account

Log in to the Azure portal and select All Services.

Select Storage Account and choose Add.

Select the subscription in which you want to create the account.

Select the desired resource group or create a new one. For details, refer to the Azure documentation on resource management.

Provide a storage account name. The name must be unique across all Azure storage accounts, must be between 3 and 24 characters long, and can contain only numbers and lowercase letters.

Select a location for your storage account, or use the default location.

Select a performance tier; Standard is recommended.

Set the Account kind field to Storage V2 (general-purpose v2).

Specify any other settings if required.

Select Review + Create to review your storage account settings.

Click Create to create the account.
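The storage account naming rule above can be checked locally before submitting the form. This is a minimal Python sketch; the function name is hypothetical, and global uniqueness can only be confirmed by Azure itself.

```python
import re

# Rule from this guide: 3 to 24 characters, lowercase letters and digits only.
_STORAGE_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Check the naming rule locally; Azure still has to confirm global uniqueness."""
    return _STORAGE_ACCOUNT_NAME.fullmatch(name) is not None


# Example: is_valid_storage_account_name("sprinklestorage01") is True,
# while "Sprinkle-Storage" fails (uppercase letters and a dash).
```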

STEP-2: Create Storage Container

Log in and go to the new storage account in the Azure portal.

In the left menu for the storage account, select Containers from the Blob service section.

Click the + Container button.

Provide a name for your new container. The container name must be lowercase, must start with a letter or number, and can include only letters, numbers, and the dash (-) character. Refer to the Azure Documentation for more information.

Set the public access level for the container, or whitelist the Sprinkle IP shown in Driver Setup.

Select OK to create the container.

For more details, refer to the Azure quickstart guide for storage containers.
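A local check of the container name can catch typos before Azure rejects them. This minimal Python sketch (function name hypothetical) applies the rules listed above plus two that Azure's container naming rules also impose: a length of 3 to 63 characters, and every dash must sit between letters or digits (so no leading, trailing or consecutive dashes).

```python
import re

# Lowercase letters/digits, optionally joined by single dashes: the name starts
# and ends with a letter or digit and never contains "--".
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` satisfies the Azure Blob container naming rules."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


# Example: "sprinkle-cache" passes; "sprinkle--cache" and "-cache" do not.
```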

STEP-3: Get Access Key

Navigate to your storage account in the Azure portal.

Under Settings, select Access keys. Your account access keys appear, as well as the complete connection string for each key.

Locate the Key value under key1, and click the Copy button to copy the account key.
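The portal shows the key both on its own and inside a connection string of the form `DefaultEndpointsProtocol=https;AccountName=...;AccountKey=...;EndpointSuffix=core.windows.net`. Sprinkle asks only for the account name and the key, so if you copied the connection string instead, the two values can be pulled out of it. The helpers below are a hypothetical Python sketch, not part of any Azure SDK.

```python
from typing import Dict


def build_connection_string(account_name: str, account_key: str,
                            endpoint_suffix: str = "core.windows.net") -> str:
    """Assemble a storage connection string in the format the portal displays."""
    parts = {
        "DefaultEndpointsProtocol": "https",
        "AccountName": account_name,
        "AccountKey": account_key,
        "EndpointSuffix": endpoint_suffix,
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())


def parse_connection_string(conn_str: str) -> Dict[str, str]:
    """Split a connection string into its key/value settings."""
    settings = {}
    for part in conn_str.split(";"):
        if part:
            # Split on the FIRST '=' only: base64 account keys end with '=' padding.
            key, _, value = part.partition("=")
            settings[key] = value
    return settings


# Example: parse_connection_string(conn)["AccountKey"] gives the value for the
# Access Key field in STEP-4.
```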

STEP-4: Configure Azure Storage connection in Sprinkle

Log in to the Sprinkle application

Navigate to Admin -> Warehouse -> New Warehouse Connection -> Add Storage

Select Azure Blob Store

Provide all the mandatory details

Distinct Name: Name to identify this connection

Storage Account Name: Created in STEP-1

Container Name: Created in STEP-2

Access Key: Copied in STEP-3

Test Connection

Create

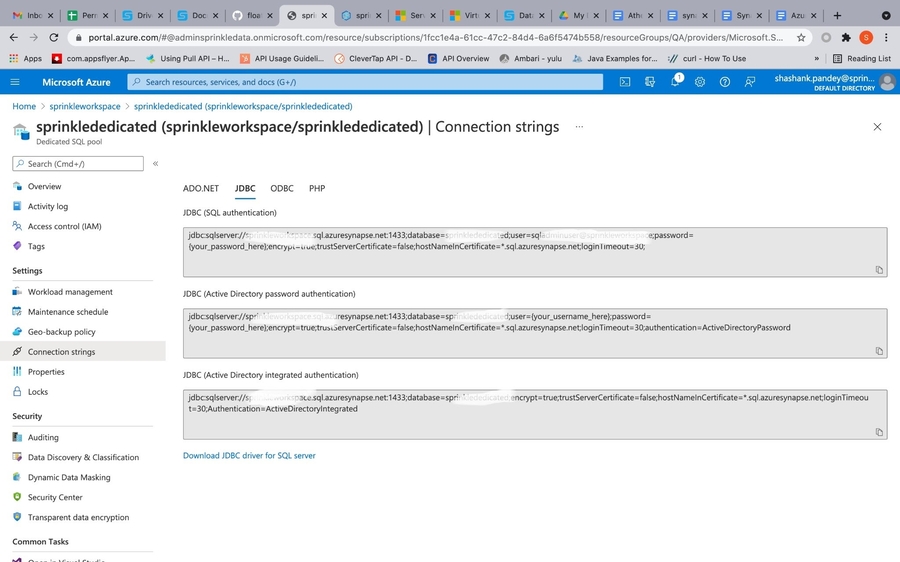

Get JDBC URL

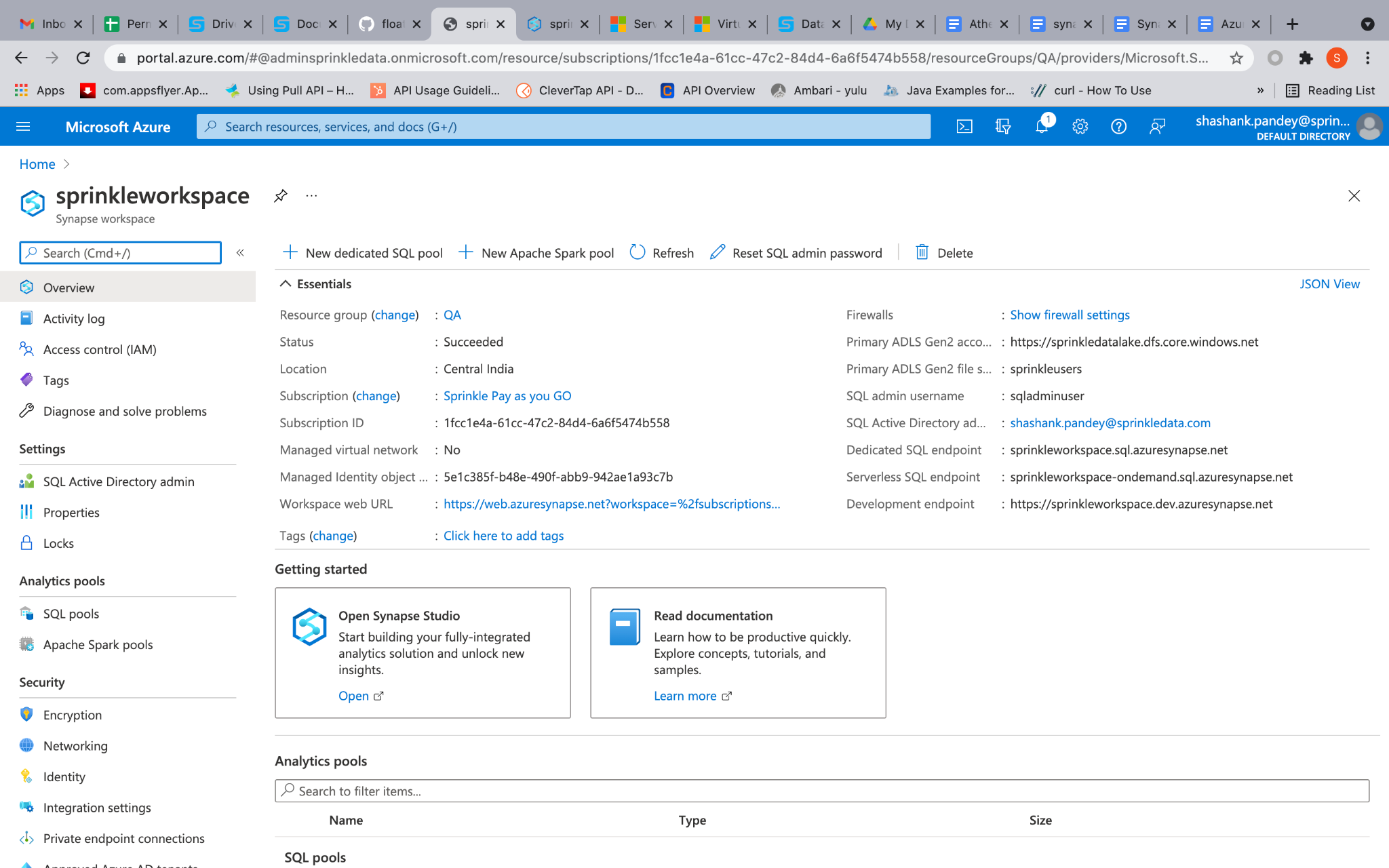

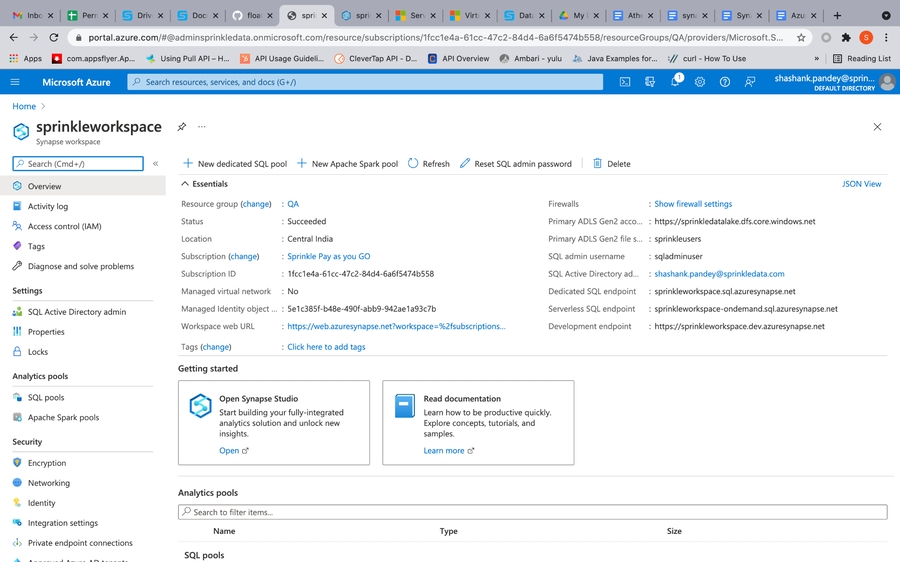

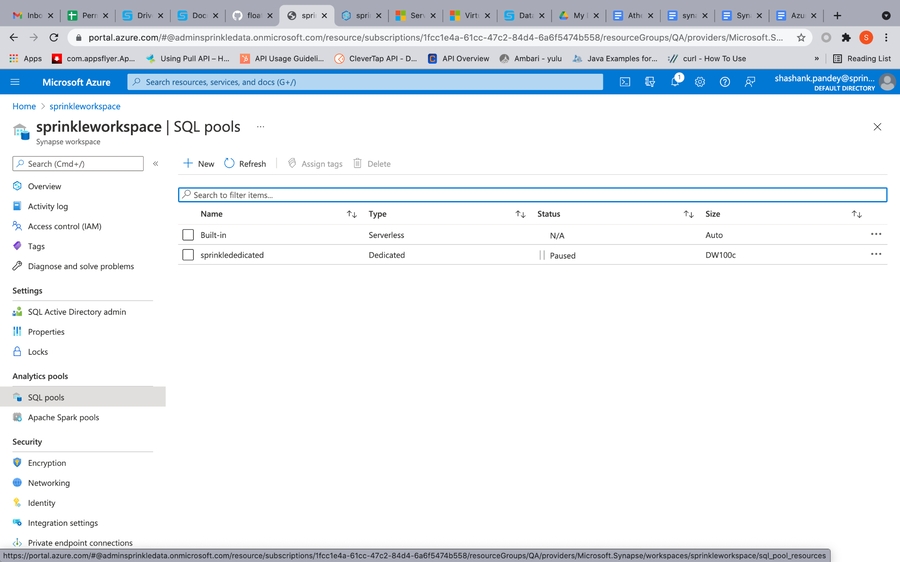

In the Azure portal, go to your Synapse workspace. In the left pane, under Analytics pools, select SQL pools.

Select the SQL pool that you want to integrate with Sprinkle.

In the left pane, under Settings, select Connection strings. Select JDBC and copy the JDBC URL, removing the username and password from it.
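Removing the credentials by hand is error-prone, and the same URL also contains the host, port and database that the Sprinkle form asks for. The Python sketch below assumes the URL follows the usual SQL Server JDBC layout (`jdbc:sqlserver://host:port;key=value;...`), as in the sample value used in the test; the function names are hypothetical, and it assumes no value contains a `;`.

```python
from typing import Dict, Tuple

_PREFIX = "jdbc:sqlserver://"
_CREDENTIAL_KEYS = {"user", "password"}


def strip_credentials(jdbc_url: str) -> str:
    """Drop user= and password= properties from a SQL Server-style JDBC URL."""
    head, *props = jdbc_url.split(";")
    kept = [p for p in props
            if p and p.split("=", 1)[0].strip().lower() not in _CREDENTIAL_KEYS]
    return ";".join([head, *kept]) + ";"


def parse_jdbc_url(jdbc_url: str) -> Tuple[str, int, Dict[str, str]]:
    """Return (host, port, properties) from a SQL Server-style JDBC URL."""
    head, *props = jdbc_url.split(";")
    host_port = head[len(_PREFIX):] if head.startswith(_PREFIX) else head
    host, _, port = host_port.partition(":")
    properties = {}
    for p in props:
        if p:
            key, _, value = p.partition("=")
            properties[key.strip().lower()] = value
    # Fall back to the default Synapse JDBC port when none is given.
    return host, int(port or 1433), properties
```

The host, port and `database` property returned by `parse_jdbc_url` map onto the Host, Port and Database fields of the Sprinkle connection form.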

If you don't have an existing Azure Synapse cluster

Follow the steps below, or refer to the Azure documentation.

Create an Azure Synapse Analytics workspace

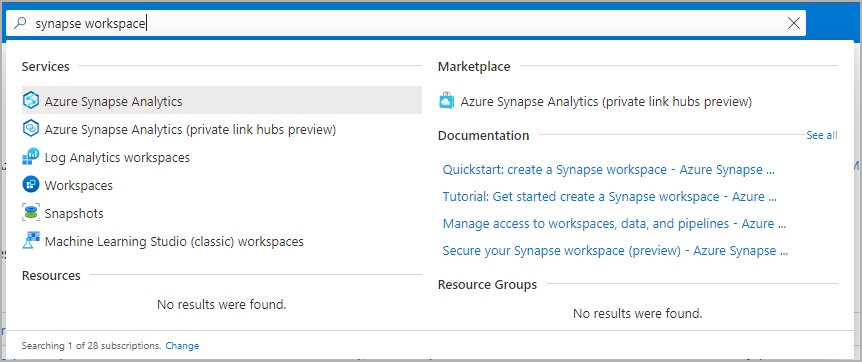

Open the Azure portal, and at the top, search for Synapse.

In the search results, under Services, select Azure Synapse Analytics.

Select Create to create a workspace.

On the Basics tab, give the workspace a unique name. We use myworkspace in this document.

You need an Azure Data Lake Storage Gen2 account to create a workspace. The simplest choice is to create a new one. If you want to reuse an existing one, you need to perform extra configuration:

Option 1: Create a new Data Lake Storage Gen2 account:

Under Select Data Lake Storage Gen 2 > Account Name, select Create New. Provide a globally unique name, such as contosolake.

Under Select Data Lake Storage Gen 2 > File system name, select File System and name it users.

Option 2: See the instructions in Prepare an existing storage account for use with Azure Synapse Analytics.

Your Azure Synapse Analytics workspace uses this storage account as the primary storage account and the container to store workspace data. The workspace stores data in Apache Spark tables. It stores Spark application logs under a folder named /synapse/workspacename.

Select Review + create > Create. Your workspace is ready in a few minutes.

Open Synapse Studio

After your Azure Synapse Analytics workspace is created, you have two ways to open Synapse Studio:

Open your Synapse workspace in the Azure portal. At the top of the Overview section, select Launch Synapse Studio.

Go to Azure Synapse Analytics and sign in to your workspace.

Prepare an existing storage account for use with Azure Synapse Analytics

Open the Azure portal.

Go to an existing Data Lake Storage Gen2 storage account.

Select Access control (IAM).

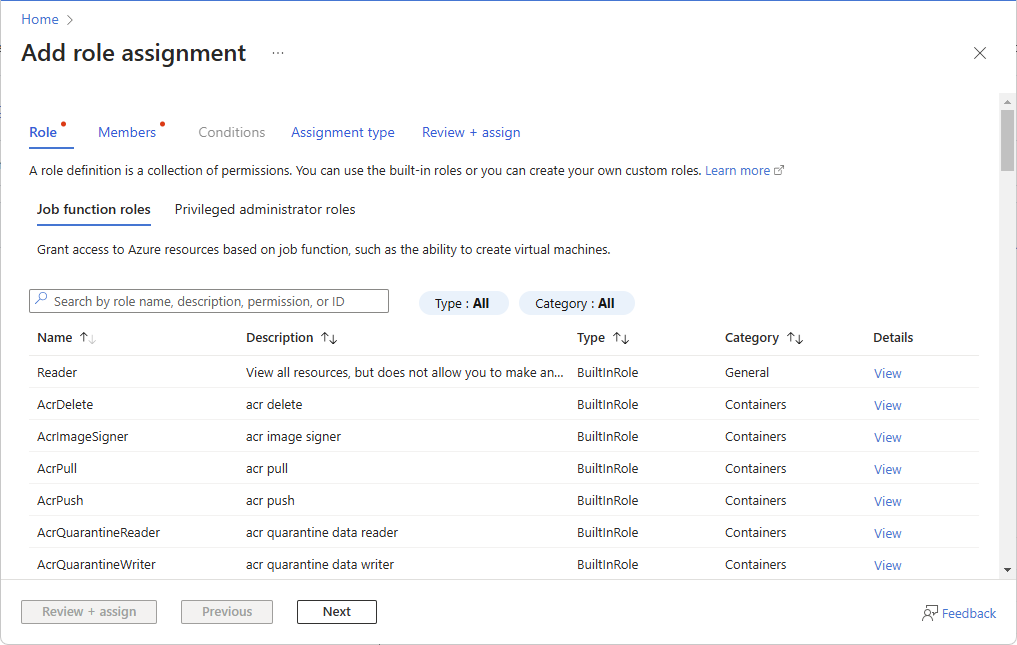

Select Add > Add role assignment to open the Add role assignment page.

Assign the following role. For more information, see Assign Azure roles by using the Azure portal.


Role: Owner and Storage Blob Data Owner

Assign access to: USER

Members: Your user name

On the left pane, select Containers and create a container.

You can give the container any name. In this document, we name the container users.

Accept the default setting Public access level, and then select Create.

Configure access to the storage account from your workspace

Managed identities for your Azure Synapse Analytics workspace might already have access to the storage account. Follow these steps to make sure:

Open the Azure portal and the primary storage account chosen for your workspace.

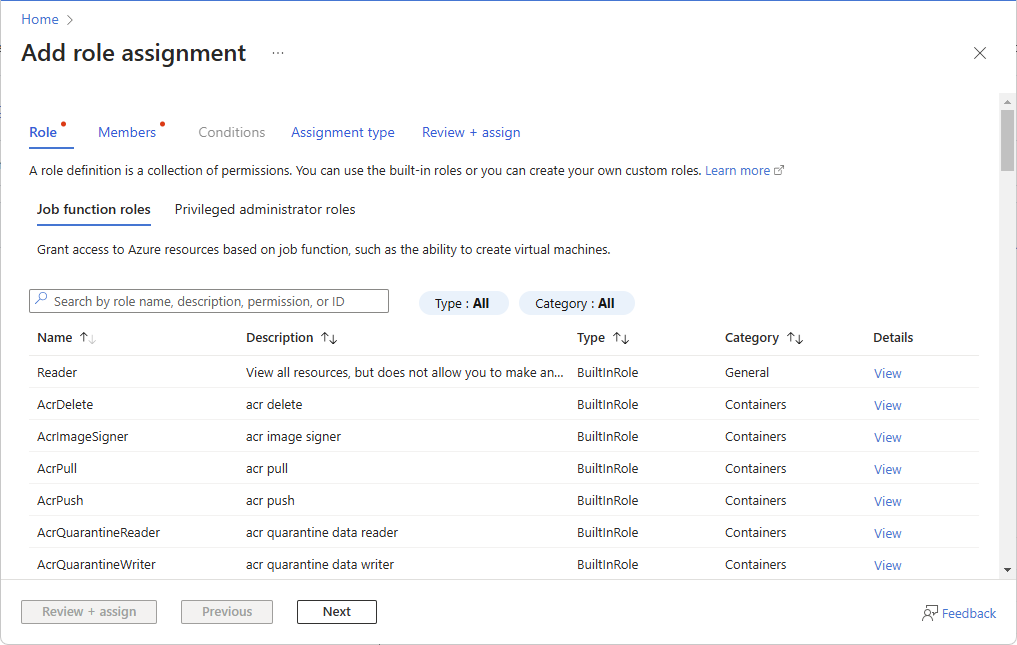

Select Access control (IAM).

Select Add > Add role assignment to open the Add role assignment page.

Assign the following role. For more information, see Assign Azure roles by using the Azure portal.

Role: Storage Blob Data Contributor

Assign access to: MANAGEDIDENTITY

Members: myworkspace

Note: The managed identity name is also the workspace name.

Select Save.

Create a serverless SQL pool while setting up the warehouse

Follow the steps below, or refer to the Azure documentation.

Create a new serverless Apache Spark pool using the Azure portal

Azure Synapse Analytics offers various analytics engines to help you ingest, transform, model, analyze, and distribute your data.

An Apache Spark pool provides open-source big data computing capabilities.

After you create an Apache Spark pool in your Synapse workspace, data can be loaded, modelled, processed, and distributed for faster analytic insight.

Here, you'll learn how to use the Azure portal to create an Apache Spark pool in a Synapse workspace.

Prerequisites

You'll need an Azure subscription. If needed, create a free Azure account.

You'll need an existing Synapse workspace.

Sign in to the Azure portal

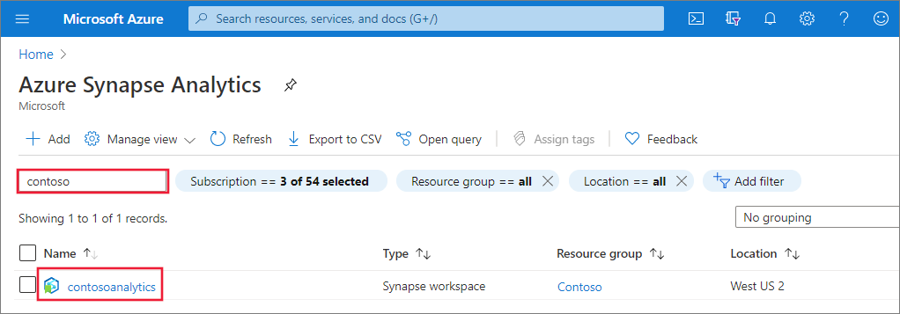

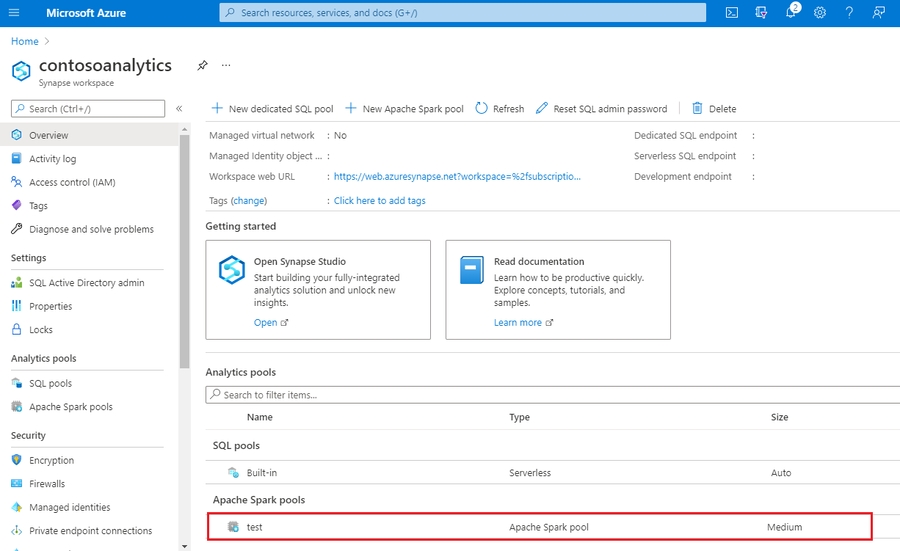

Navigate to the Synapse workspace

Navigate to the Synapse workspace where the Apache Spark pool will be created by typing the service name (or resource name directly) into the search bar.

From the list of workspaces, type the name (or part of the name) of the workspace to open. For this example, we use a workspace named contosoanalytics.

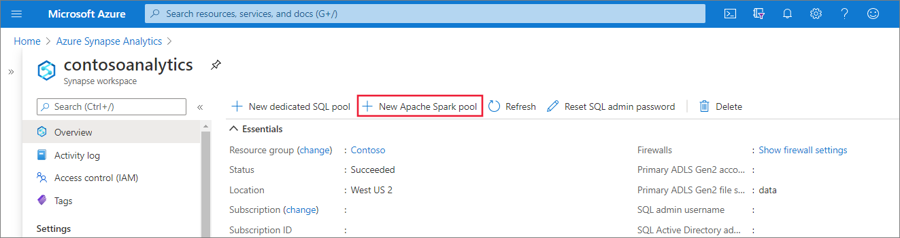

Create new Apache Spark pool

In the Synapse workspace where you want to create the Apache Spark pool, select New Apache Spark pool.

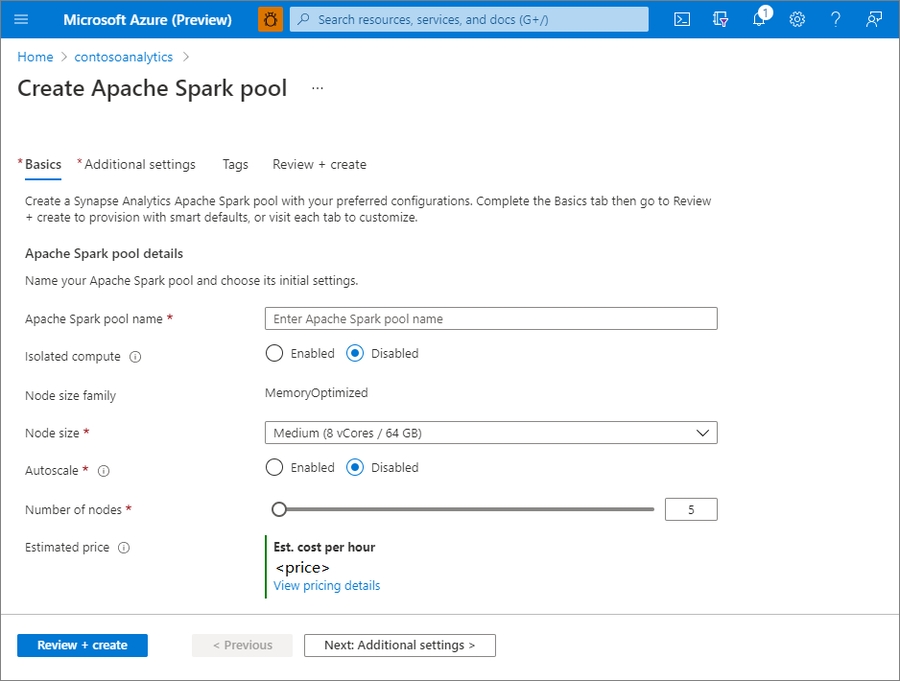

Enter the following details in the Basics tab:

Important: There are specific limitations on the names that Apache Spark pools can use. Names must contain only letters or numbers, must be 15 characters or fewer, must start with a letter, must not contain reserved words, and must be unique in the workspace.

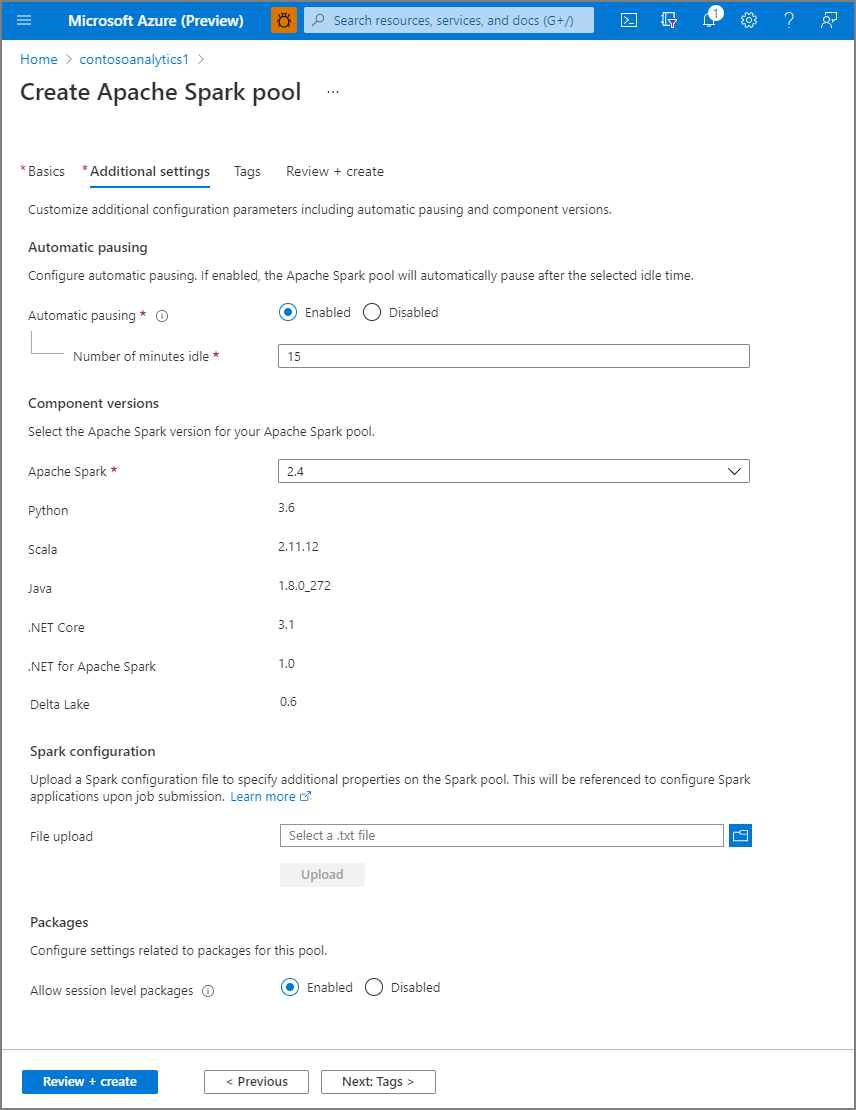

Select Next: additional settings and review the default settings. Don't modify any default settings.

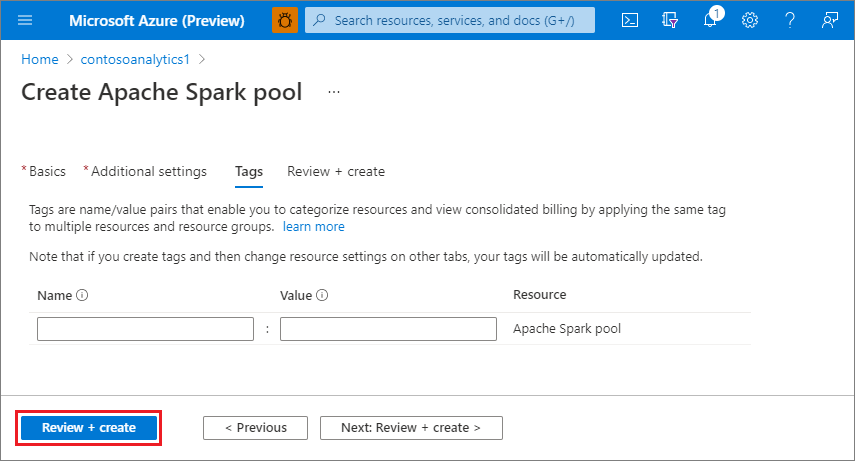

Select Next: tags. Consider using Azure tags. For example, the "Owner" or "CreatedBy" tag to identify who created the resource, and the "Environment" tag to identify whether this resource is in Production, Development, etc. For more information, see Develop your naming and tagging strategy for Azure resources.

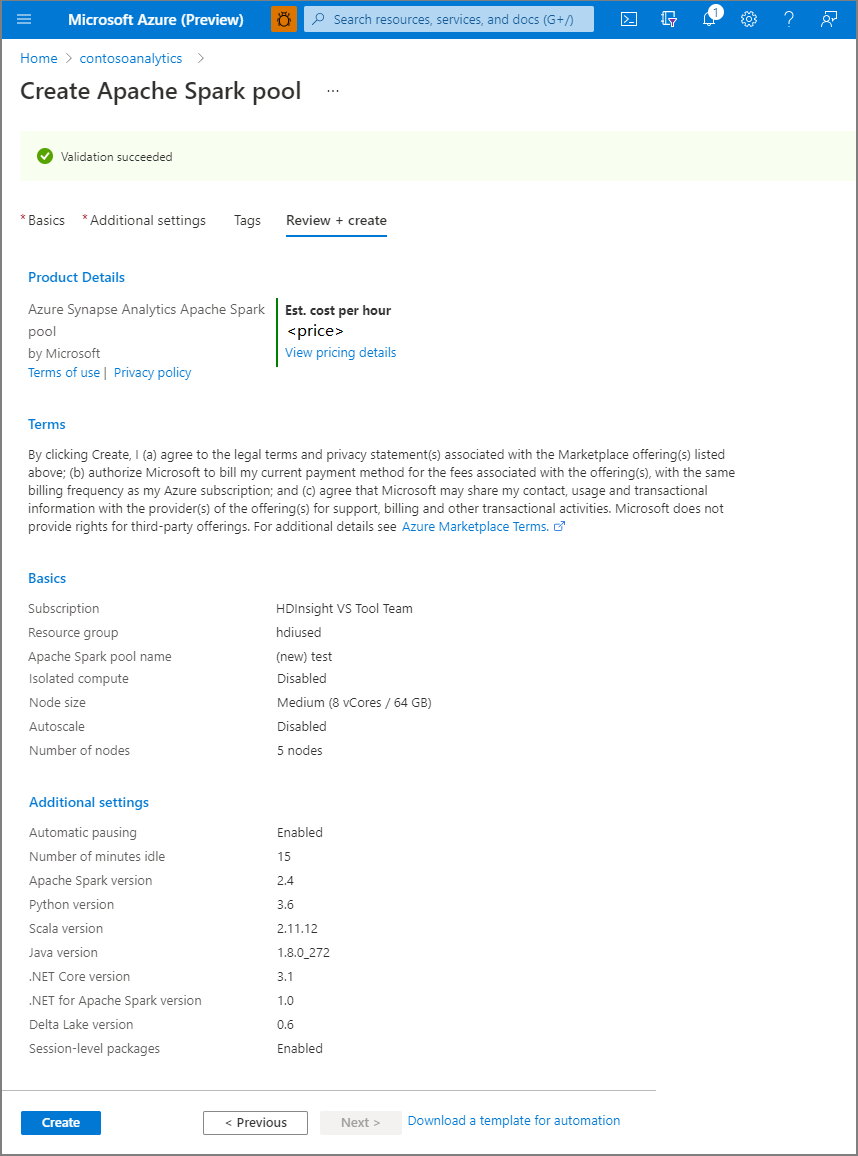

Select Review + create.

Make sure that the details look correct based on what was previously entered, and select Create.

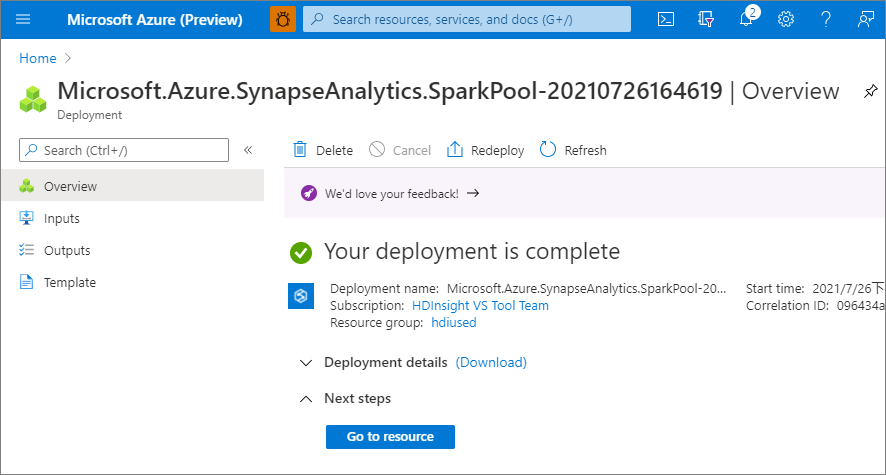

The resource provisioning flow then starts and indicates when it's complete.

After the provisioning completes, navigating back to the workspace will show a new entry for the newly created Apache Spark pool.

At this point, no resources are running and there are no charges for Spark; you have only created metadata about the Spark instances you want to create.
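The Apache Spark pool naming rules from the Basics tab step can be pre-checked before submitting. This is a minimal Python sketch with stated assumptions: the guide does not list the reserved words, so `reserved_words` is a caller-supplied, hypothetical list; "letters" is taken to mean ASCII letters; and both the reserved-word and uniqueness checks are assumed to be case-insensitive.

```python
import re
from typing import Iterable

# Starts with a letter, letters/digits only, at most 15 characters in total.
_SPARK_POOL_NAME = re.compile(r"[A-Za-z][A-Za-z0-9]{0,14}")


def is_valid_spark_pool_name(name: str,
                             existing_pools: Iterable[str] = (),
                             reserved_words: Iterable[str] = ()) -> bool:
    """Check a proposed Spark pool name against the rules in this guide."""
    if _SPARK_POOL_NAME.fullmatch(name) is None:
        return False
    lowered = name.lower()
    # Assumption: a name is rejected if it contains any reserved word.
    if any(word.lower() in lowered for word in reserved_words):
        return False
    # Assumption: uniqueness within the workspace ignores case.
    return lowered not in {pool.lower() for pool in existing_pools}
```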

External data source creation

Create a new database which will be configured in Sprinkle:

Use the credentials of the Data Lake Storage Gen2 account that was selected when the Synapse Analytics workspace was created.

In the Azure portal, select the storage account from the home page, then go to Security + Networking > Shared Access Signature.

Select the checkboxes under Allowed resource types and provide the start and expiry dates.

Click Generate SAS and connection string.

Copy the SAS token and use it in the query below:
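The query itself is not included on this page. One common shape, based on the T-SQL that Azure documents for SAS access from serverless SQL pools, is a database-scoped credential with `IDENTITY = 'SHARED ACCESS SIGNATURE'` followed by an external data source that uses it; Azure's documentation also indicates the database needs a master key (`CREATE MASTER KEY`) before a credential can be created. The Python helper below only composes that T-SQL text and is a hedged sketch, not necessarily the exact query Sprinkle expects: the function name, credential name, data source name and location are all hypothetical placeholders.

```python
def external_data_source_sql(credential_name: str, sas_token: str,
                             data_source_name: str, location: str) -> str:
    """Compose T-SQL for a SAS-backed credential and external data source.

    All names are caller-supplied placeholders. Values are inlined as text, so
    use trusted input only (single quotes in the token are doubled).
    """
    # The portal's SAS token often starts with '?'; the SECRET must not include it.
    secret = sas_token.lstrip("?").replace("'", "''")
    return (
        f"CREATE DATABASE SCOPED CREDENTIAL [{credential_name}]\n"
        f"WITH IDENTITY = 'SHARED ACCESS SIGNATURE',\n"
        f"     SECRET = '{secret}';\n"
        f"\n"
        f"CREATE EXTERNAL DATA SOURCE [{data_source_name}]\n"
        f"WITH (LOCATION = '{location}',\n"
        f"      CREDENTIAL = [{credential_name}]);\n"
    )


# Example (hypothetical names): run the returned text in the new database, e.g.
# external_data_source_sql("SprinkleSas", token, "SprinkleLake",
#                          "https://contosolake.dfs.core.windows.net/users")
```

The external data source name you choose is presumably the value to enter in the External Data Source field of the Sprinkle connection form for Serverless.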
